Schools once worried most about students drawing silly pictures of their teachers or making humorous images of their friends. Now they are drafting emergency plans in case sexually explicit deepfake images of students or teachers appear on social media.

In two recent incidents, AI-generated deepfakes depicted school principals using racist and violent language. Students created one; the other was made by an athletic director who was later arrested.

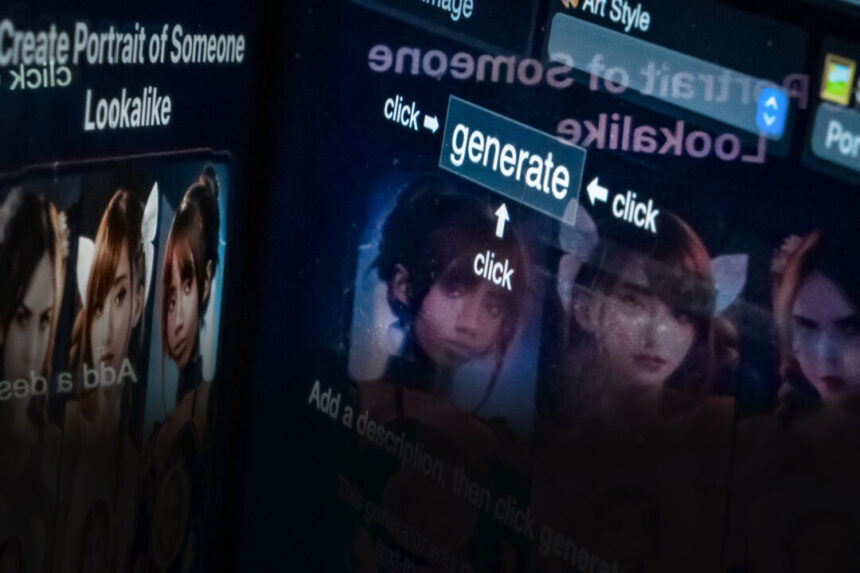

Deepfakes are AI-generated images, audio, or video that realistically imitate real people. They can be used for malicious purposes, such as creating fake sexual imagery or spreading misinformation.

Education leaders are struggling to prevent and respond to these deepfakes because the technology to combat them does not yet exist. State and federal lawmakers have proposed legislation to criminalize the creation and distribution of deepfakes.

School districts are seeking guidance on handling these emerging threats, which affect staff members as well as students. Incidents such as fake audio recordings of principals have led to criminal charges and law enforcement investigations.

As AI technology evolves, schools may need new systems to monitor and guard against deepfakes. Recent cases of students using AI to impersonate school officials show why the issue must be addressed to protect students and staff.

Lyons said her daughter was frightened by the 2023 deepfake incident and that school lockdowns and emergency drills still trigger her anxiety and fear. “Seventh graders should not have to worry about these things,” Lyons told The Epoch Times.

She added that although no deepfake incidents have been reported this semester, students have threatened one another on social media, prompting a two-hour building lockdown. Lyons said she was frustrated by the lack of transparency in such situations.

The Epoch Times contacted district offices in Carmel, New York, and Baltimore County, Maryland, but received no response.

California’s new law was prompted by several deepfake incidents targeting students.

Patrick Gittisriboongul, assistant superintendent for technology and innovation in the Lynwood Unified School District near Los Angeles, said the district has adopted a zero-tolerance policy on deepfakes. The policy requires staff to notify law enforcement and support victims, and the district maintains an AI incident response plan.

The district also restricts AI use, applies content filters to online activity, and enforces guidelines for ethical technology use by students and staff. Gittisriboongul said the district took these proactive measures because of incidents in other Southern California districts.

The Center for Democracy and Technology reported that during the 2023–24 school year, 40 percent of students and 29 percent of teachers knew of deepfakes depicting people associated with their school. The report found that most teachers had not been trained to handle deepfakes and that their districts had not updated policies to address such incidents.

A consortium of nonprofits has also launched the Campaign to Ban Deepfakes (CBD) to raise public awareness worldwide. CBD is circulating an online petition calling for all deepfakes to be criminalized and for technology developers and content creators to be held accountable.

In addition, a California law firm specializing in education law released guidance on deepfakes ahead of the 2024–25 school year. The firm advised districts to establish AI policies, teach students to use AI responsibly, and be prepared to address off-campus conduct that affects K–12 school communities.

To discipline a student for misusing AI off campus, a school would need to show that the conduct had a significant impact on the school.

Despite efforts to establish a global social norm against deepfakes, many students and school staff members remain at risk.

According to Siegl, education leaders should teach school communities about the dangers and consequences of AI-generated impersonations and make clear that using the technology to damage someone’s reputation is harassment.

“Let law enforcement determine whether it’s a deepfake or not,” he suggested.

