An Adobe study found that a large majority of Australian voters are worried that AI could be misused to spread misinformation through synthetic election ads. Four out of five respondents favor a ban on its use in campaigns, citing the difficulty of telling fake information from real. A significant number also reported stepping back from social media to avoid fake content.

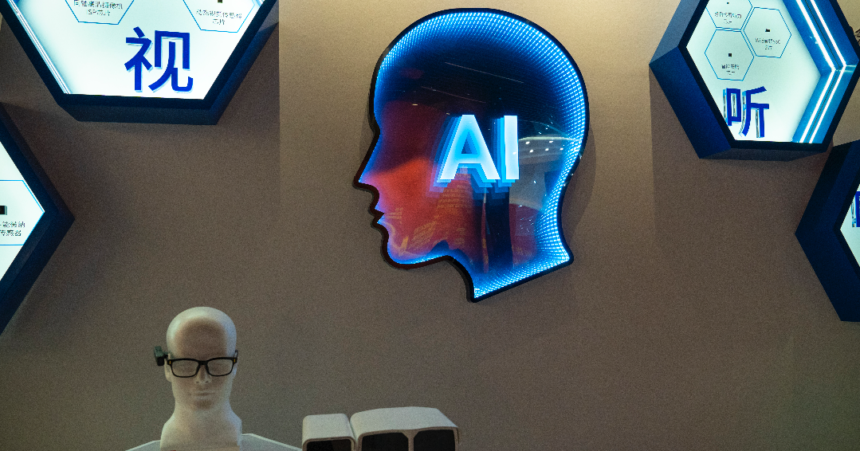

AI-driven misinformation, particularly deepfakes, poses a significant threat to elections worldwide. False information spreads rapidly on social media platforms and can strongly influence public opinion and democratic processes.

Adobe's “Future of Trust” study surveyed 1,005 eligible voters in Australia and New Zealand. A majority of respondents said electoral candidates and parties should be prohibited from using AI, and raised concerns about the risks deepfakes pose to democracy.

Adobe’s Asia Pacific government relations director, Jennifer Mulveny, said consumers need stronger media literacy to identify harmful deepfakes and distinguish fact from fiction. With the Australian federal election approaching, she stressed the importance of adopting protective technologies such as content credentials to restore trust in digital content.

Unregulated Use of AI

In a notable incident in 2020, a conservative lobby group released a deepfake video depicting the then-Queensland Premier, Annastacia Palaszczuk, delivering a fake press conference. The video raised concerns that deepfake technology could be used to spread convincing disinformation in political campaigns.

Efforts to address the risks of AI-generated content include proposals to amend electoral laws so that such content must be labeled. The lack of clear regulation has also prompted discussion of digital watermarking as a way to identify artificially generated material.

Regulatory bodies such as the Australian Competition and Consumer Commission have conducted inquiries into misinformation and recommended reforms to improve media literacy. As AI-powered disinformation evolves, proactive measures to detect and counter deceptive content become more important.
