Everyone knows what the AI apocalypse is supposed to look like. The movies WarGames and The Terminator feature a superintelligent computer taking control of weapons in a bid to end humankind. Fortunately, that scenario is unlikely for now. U.S. nuclear missiles, which run on decades-old technology, require a human being with a physical key to launch.

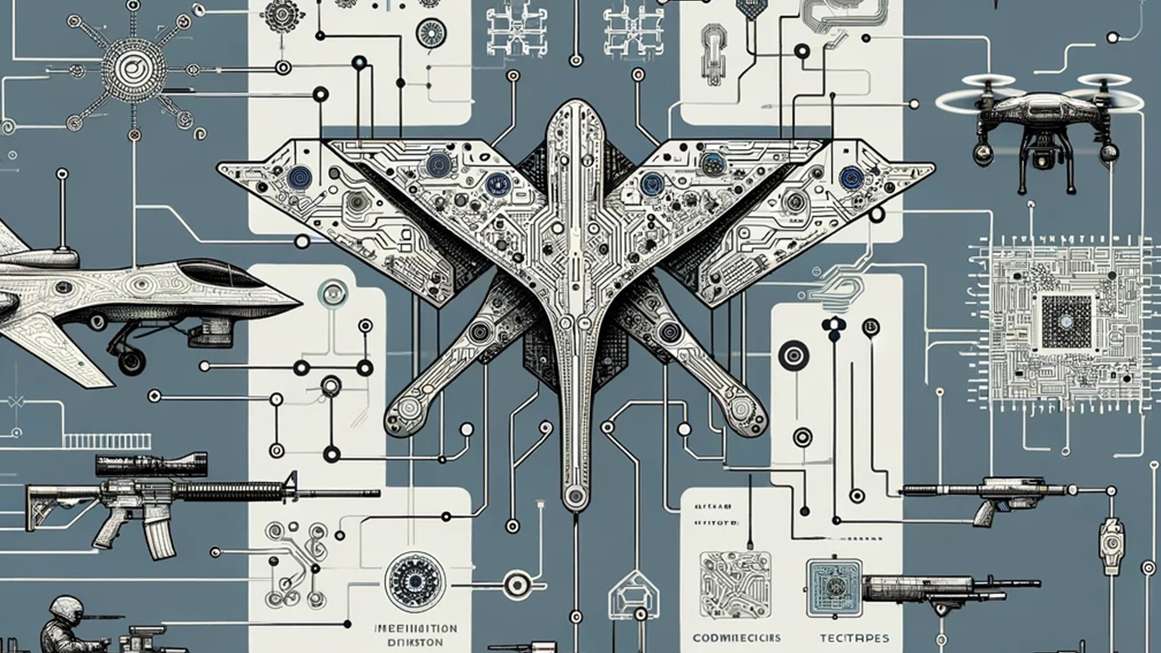

But AI is already killing people around the world in more boring ways. The U.S. and Israeli militaries have been using AI systems to sift through intelligence and plan airstrikes, according to Bloomberg News, The Guardian, and +972 Magazine.

This type of software has allowed commanders to find and list targets far faster than their human staff could on their own. The attacks are then carried out by human pilots, either in manned aircraft or with remote-controlled drones. “The machine did it coldly. And that made it easier,” an Israeli intelligence officer said, according to The Guardian.

Going further, Turkish, Russian, and Ukrainian weapons manufacturers claim to have built “autonomous” drones that can strike targets even if their connection to the remote pilot is lost or jammed. Experts, however, are skeptical about whether these drones have made truly autonomous kills.

In war as in peace, AI is a tool that empowers human beings to do what they want more efficiently. Human leaders will make decisions about war and peace the same way they always have. For the foreseeable future, most weapons will require a flesh-and-blood fighter to pull a trigger or press a button. AI allows the people in the middle—staff officers and intelligence analysts in windowless rooms—to mark their enemies for death with less effort, less time, and less thought.

“That Terminator image of the killer robot obscures all of the already-existing ways that data-driven warfighting and other areas of data-driven policing, profiling, border control, and so forth are already posing serious threats,” says Lucy Suchman, a retired professor of anthropology and a member of the International Committee for Robot Arms Control.

Suchman argues that it’s most helpful to understand AI as a “stereotyping machine” that runs on top of older surveillance networks. “Enabled by the availability of massive amounts of data and computing power,” she says, these machines can learn to pick out the sorts of patterns and people that governments are interested in. Think Minority Report rather than The Terminator.

Even if human beings review AI decisions, the speed of automated targeting leaves “less and less room for judgment,” Suchman says. “It’s a really bad idea to take an area of human practice that is fraught with all sorts of problems and try to automate that.”

AI can also be used to close in on targets that human beings have already chosen. For example, Turkey’s Kargu-2 attack drone can hunt down a target even after it has lost its connection to its operator, according to a 2021 United Nations report on fighting in Libya that involved the Kargu-2.

The usefulness of “autonomous” weapons is “really, really situational,” says Zachary Kallenborn, a policy fellow at George Mason University who specializes in drone warfare. For instance, a ship’s missile defense system might have to shoot down dozens of incoming rockets, with little danger of hitting anything else. While an AI-controlled gun would be useful in that situation, Kallenborn argues, unleashing autonomous weapons on “human beings in an urban setting is a terrible idea,” because of the difficulty of distinguishing between friendly troops, enemy fighters, and bystanders.

The scenario that really keeps Kallenborn up at night is the “drone swarm,” a network of autonomous weapons giving each other instructions, because an error could cascade across dozens or hundreds of killing machines.

Several human rights groups, including Suchman’s committee, are pushing for a treaty banning or regulating autonomous weapons. So is the Chinese government. While Washington and Moscow have been reluctant to submit to international control, they have imposed internal limits on AI weapons.

The U.S. Department of Defense has issued regulations requiring human supervision of autonomous weapons. More quietly, Russia seems to have turned off its Lancet-2 drones’ AI capabilities, according to an analysis cited by the military-focused online magazine Breaking Defense.

The same impulse that drove the development of AI warfare seems to be driving the limits on it too: human leaders’ thirst for control.

Military commanders “want to very carefully manage how much violence you inflict,” says Kallenborn, “because ultimately you’re only doing so to support larger political goals.”